Big Data Hadoop Certification Training

Discover the depths of Big Data Hadoop Technology. Join us now to gain comprehensive insights into Big Data Hadoop Technology from a seasoned professional.

5.0 out of 5 based on 103 user reviews.




Best Big Data Hadoop Training Institute in Delhi NCR

Join the best Big Data Hadoop online training course in Delhi and Noida.

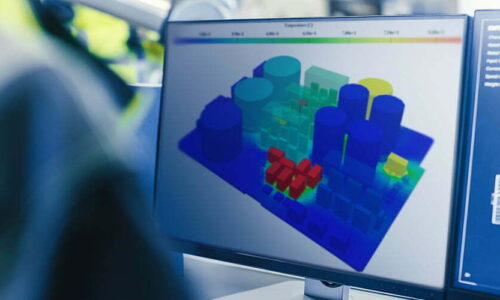

Big Data refers to collections of datasets too large to be processed with conventional computing systems. It is more than just data: it has become a discipline of its own, with its own tools, methods, and frameworks. As discussed in the Big Data training at CETPA Infotech, the best Big Data Training Institute in Delhi NCR, Big Data is raw data that is collected, stored, and analyzed by various means so that organizations can work more effectively and make better decisions.

Organizations are also learning that important forecasts can be made by sorting through and analyzing Big Data. Because more than 75% of this data is “unstructured”, it must first be put into a form suitable for data mining and further analysis. Our Big Data Training in Delhi NCR introduces the platforms used to structure Big Data and prepare it for analytics. Chief among them is Hadoop, an Apache open-source framework written in Java that uses simple programming models to process massive datasets in a distributed way across clusters of computers. It provides large-scale storage for many kinds of data, substantial processing power, and the ability to run a very large number of concurrent tasks or jobs.
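To make the idea of distributed processing with simple programming concepts more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce steps that Hadoop's MapReduce model spreads across a cluster. It is plain Python rather than the Hadoop API, and the function names and sample input are illustrative assumptions, not part of the course material.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as a framework would between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence on an in-memory list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Hypothetical sample input; on a real cluster each line could live on a different node.
    sample = ["big data needs hadoop", "hadoop stores big data"]
    print(word_count(sample))
```

On a real cluster the map calls would run in parallel on the nodes that hold the data, and the framework would handle the grouping step and any node failures; this sketch only shows the shape of the computation.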

Hadoop was created by Doug Cutting, the creator of Apache Lucene, a widely used text search library. The major events that led to a stable version of Hadoop are as follows:

- 2002 - Doug Cutting and Mike Cafarella started the Nutch project, an open-source web search engine intended to handle billions of searches and index millions of web pages.

- Oct 2003 - Google published its paper on the Google File System (GFS).

- Dec 2004 - Google published its paper on MapReduce.

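- 2005 - Nutch adopted its own open-source implementations of a GFS-style distributed file system and MapReduce.

- 2006 - Hadoop was split out of Nutch as a separate project modeled on GFS and MapReduce, and Doug Cutting joined Yahoo!, which put a team behind it.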

- 2007 - Yahoo started using Hadoop on a 1000-node cluster.

- Jan 2008 - Hadoop became a top-level Apache project.

- Jul 2008 - Tested a 4000-node cluster with Hadoop successfully.

- 2009 - Hadoop sorted a petabyte of data in less than 17 hours.

- Dec 2011 - Apache released Hadoop 1.0.

- Aug 2013 - Version 2.0.6 was released.

This is the age of Hadoop, and it brings a wealth of job opportunities. Some of the most renowned companies are modernizing their search engines with Hadoop technology and are looking to hire more people with Hadoop skills to support that work. A few other companies are looking for people with OpenStack experience, with Hadoop as one of the primary requirements.

Businesses are actively seeking candidates proficient in Big Data Hadoop for a variety of positions. These positions include:

- Product Managers

- Database Administrators

- Engineers and Professionals with Operating Skills

- Software Testers

- Senior Hadoop Developers

- Team Leads

- Hadoop Developers

Individuals should also consider enrolling in our Big Data Hadoop Training in Noida, as Big Data Hadoop is widely used and the training offers aspirants the following benefits:

- Better Career

- Better Salary

- Big Companies Hiring

- Better Job Opportunities

Prerequisites for Big Data Hadoop training:

- Basic knowledge of Java and Linux

- Understanding of Nodes and Cluster

- Good knowledge of Database, SQL

- Basic knowledge of Programming Languages: Java, Python

- Understanding the Architecture of the Hadoop System

CETPA is one of the most renowned institutes offering Big Data Hadoop training. Its highly skilled and experienced professionals train students and prepare them for their careers. The Big Data Hadoop curriculum is designed to the latest industry standards, which makes the course a strong opportunity for students to gain technical knowledge and open the door to lucrative opportunities. CETPA offers both online and classroom Big Data Hadoop training, with the best lab facilities.

Students choose CETPA Infotech for Big Data Hadoop training over other institutes because of the following features:

- CETPA provides a one-year membership card to every student.

- The institute boasts highly skilled trainers to train students in Big Data Hadoop training.

- We provide flexible slots for Big Data Hadoop training as per the demands of the students.

- 100% Placement Assistance after successful completion of Big Data Hadoop training.

- Six Months Industrial Training in Big Data Hadoop with expert and experienced faculty members.

- Best lab facilities and infrastructure to allow students to work on live projects.

INDUSTRIES USING BIG DATA HADOOP

The top companies using Big Data Hadoop are:

- Yahoo (one of the biggest users and the contributor of more than 80% of Hadoop's code)

- Netflix

- Amazon

- Adobe

- eBay

- Alibaba

- IBM

These top-level companies demand professionals having expertise in Big Data Hadoop and reward them with competitive packages and good career growth. Hence, aspirants must join CETPA right away to pursue Big Data Hadoop training and fulfill their career demands.

CETPA is a reputed training institute, well known for placing students in top-notch companies. Our well-established placement and consultancy wing gives students good exposure to reputed companies. Students enrolled at our institute get the chance to work on real projects, and the institute has a 100% job placement track record. Our industry-recognized certifications also help students secure the best opportunities at reputed companies. At CETPA, we believe in value for money and help students develop their careers optimally. Some of the recent placements made by the institute are:

| S.No | Student Name | Company Where Placed | Package |
|---|---|---|---|
| 1 | Vishwesh Mishra | Kites Techno World | 1.22 LPA |
| 2 | Mausam Suri | Kites Techno World | 1.22 LPA |
| 3 | Ankesh K Srivastav | Kites Techno World | 1.22 LPA |
| 4 | Sangita | EI Softwares | 1.2 LPA |

Hence, we at CETPA train students to be industry-ready, and this is reflected in our placements. Students looking for a good and exciting career should join CETPA for an outstanding experience.

The various benefits of pursuing a Big Data course are listed below for further reference:

- You will get a better knowledge of programming and how to implement it for actual development requirements in industrial projects and applications.

- Enhanced knowledge of the web development framework. Using this framework, you can develop dynamic websites swiftly.

- You will learn how to design, develop, test, support, and deploy desktop, custom web, and mobile applications.

- Design and improve testing and maintenance activities and procedures.

- Design, implement, and develop important applications in a Big Data environment.

- Increased chances of working in leading software companies like Infosys, Wipro, Amazon, TCS, IBM, and many more.

Professional growth, increased compensation, and validation of skills are the most popular reasons why individuals and professionals seek IT certifications. Keeping this in mind, we at CETPA Infotech offer industry-recognized certifications in the latest and most innovative technologies to help you reach your certification goals. The training courses offered at our institute are curated to international standards and curricula, which makes us a strong choice if you are looking for a reputed training program. CETPA offers certification through its training partners, enabling you to verify the technical skills specific to your domain. Certification from these well-known firms can help you land your ideal career.

- Gives a competitive advantage over others while searching for a job.

- Ensures knowledge and skills are up to date and can be applied to the job.

- Provides credibility to those looking for a career in the IT field.

- Offers fast track to career advancements.

- Demonstrates a level of competency.

- Offers professional credibility and demonstrates an individual’s dedication and motivation to professional development.

- Helps individuals stand out from the crowd to be successful in their positions.

- Represents a well-recognized and valued IT credential that increases marketability and competitive edge.

- Gives employers confidence that certified employees have truly learned the skills necessary to do their jobs.

- Gives employers a valuable credential to look for in prospective employees, and can help retain top performers when offered as an incentive.

- Offers a competitive advantage when the team is trained and certified regularly.


MODE/SCHEDULE OF TRAINING:

| Delivery Mode | Location | Course Duration | Schedule (New Batch Starting) |
|---|---|---|---|
| Classroom Training (Regular/ Weekend Batch) | *Noida/ *Roorkee/ *Dehradun | 4/6/12/24 Weeks | New Batch Wednesday/ Saturday |
| *Instructor-Led Online Training | Online | 40/60 Hours | Every Saturday or as per the need |
| *Virtual Online Training | Online | 40/60 Hours | 24x7 Anytime |
| College Campus Training | India or Abroad | 40/60 Hours | As per Client’s need |
| Corporate Training (Fly-a-Trainer) | Training in India or Abroad | As per need | Customized Course Schedule |

Course Content

- What is RDBMS?

- What is Big Data?

- Problems with the RDBMS and other existing systems

- Requirement for the new approach

- Solution to the problem with huge

- Difference between relational databases and NoSQL type databases

- Need of NoSQL type databases

- Problems in processing of Big Data with the traditional systems

- How to process and store Big Data?

- Where to use Hadoop?

- What is Hadoop?

- Why use Hadoop?

- Architecture of Hadoop

- Difference between Hadoop 1.x and Hadoop 2.x

- What is YARN?

- Advantage of Hadoop 2.x over Hadoop 1.x

- Use cases for using Hadoop

- Components of Hadoop

- Hadoop Distributed File System (HDFS)

- Map Reduce

- Components of HDFS

- Why was HDFS needed?

- DataNode, NameNode, Secondary NameNode

- High Availability and Fault Tolerance

- Command Line interface

- Data Ingestion

- Hadoop Commands

- Installation of Hadoop

- Understanding the Configuration of Hadoop

- Starting the Hadoop related Processes

- Visualization of Hadoop in UI

- Writing the files to the HDFS

- Reading the files from the Hadoop Cluster

- Workflow of the job

- What is HBASE?

- Why is HBASE needed?

- HBASE Architecture and Schema Design

- Column Oriented and Row Oriented Databases

- HBASE Vs RDBMS

- Overview of the Map Reduce

- History of Map Reduce

- Flow of Map Reduce

- Working of Map Reduce with simple example

- Difference Between Map phase and Reduce phase

- Concept of Partition and Combiner phase in Map Reduce

- Submission of a Map Reduce job in a Hadoop cluster and its completion

- File support in Hadoop

- Achieving different goals using Map Reduce programs

- What is Sqoop?

- Use cases for Sqoop

- Configuring Sqoop

- Importing and Exporting Data using Sqoop

- Importing data into Hive using Sqoop

- Code Generation using Sqoop

- Using Map Reduce with the Sqoop

- Introduction to Apache Pig

- Architecture of Apache Pig

- Why Pig?

- RDBMS Vs Apache PIG

- Loading data using PIG

- Different Modes of execution of PIG Commands

- PIG Vs Map Reduce coding

- Diagnostic operations in Pig

- Combining and Filtering Operations in Pig

- What is Flume?

- Architecture of Flume

- Why do we need Flume?

- Problem with traditional export method

- Configuring Flume

- Different Channels in Flume

- Importing data using Flume

- Using Map Reduce with the Flume

- Introduction to HIVE

- Architecture of HIVE

- Why HIVE?

- RDBMS Vs HIVE

- Introduction to HiveQL

- Loading data using HIVE

- HIVE Vs Map Reduce Coding

- Different functions supported in HIVE

- Partitioning, Bucketing in HIVE

- Hive Built-In Operators and Functions

- Why do we need Partitioning and Bucketing in HIVE?

- What is MongoDB?

- Difference between MongoDB and RDBMS

- Advantages of MongoDB over RDBMS

- Installing MongoDB

- What are Collections and Documents?

- Creating Databases and Collections.

- Working with Databases and Collections

- Introduction to R Language

- Introduction to R Studio

- Why use R?

- R Vs Other Languages

- Using R to analyze the data extracted using Map Reduce

- Introduction to ggplot package

- Plotting the graphs of the extracted data from Map Reduce using R

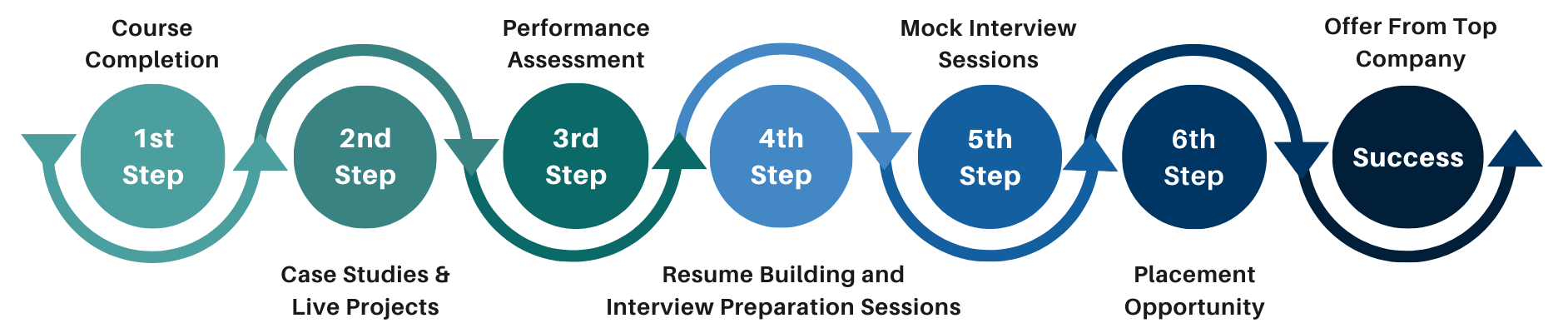

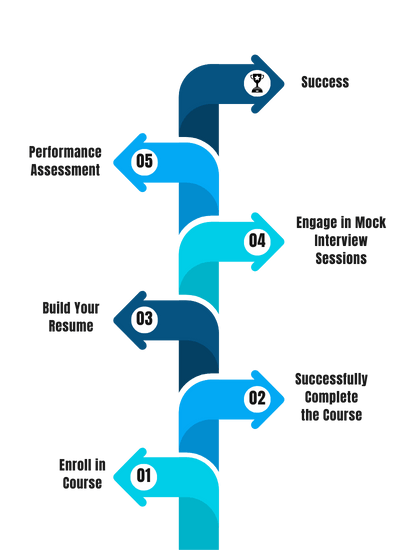

Our Process

FAQs

- Classroom Training

- Online Training

- Corporate Training

- On campus Training

Career Assistance

- Build an Impressive Resume

- Attend Mock-Up Interviews with Experts

- Get Interviews & Get Hired

Training Certification

Earn your certificate

Your certificate and skills can jump-start your career and give you a chance to compete in a global space.

Share your achievement

Talk about it on LinkedIn, Twitter, or Facebook, add it to your resume, or frame it. Tell your friends and colleagues about it.


Structure your learning and get a certificate to prove it.


Review Us

Suhana Sharma

17 Feb 2024

Big Data Hadoop Training In Noida

CETPA is the best training company for Big Data Hadoop. I say this because of my excellent experience with CETPA: they provide globally certified Big Data Hadoop training along with assured placement assistance. If you want to learn Hadoop by working on a live project, then opt for CETPA.

Course Features

- Lectures 0

- Quizzes 0

- Duration 10 weeks

- Skill level All levels

- Language English

- Students 0

- Assessments Yes